Deployment environment

| IP address | Hostname | Role |
|---|---|---|
| 10.1.32.230 | k8s-deploy-test | Deployment node only; plays no role in the running cluster |
| 10.1.32.231 | k8s-master-test01 | Master node |
| 10.1.32.232 | k8s-master-test02 | Master node |
| 10.1.32.233 | k8s-master-test03 | Master node |
| 10.1.32.240 | k8s-nginx-test | Load balancer node (should be an HA setup in production) |
| 10.1.32.234 | k8s-node01-test01 | Worker node |
| 10.1.32.235 | k8s-node02-test02 | Worker node |
| 10.1.32.236 | k8s-node03-test03 | Worker node |

Deploying a highly available kube-scheduler cluster

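The Kubernetes scheduler is a policy-rich, topology-aware, workload-specific function. It has a significant effect on cluster availability, performance and capacity.

The scheduler's main responsibility is to find the most suitable node in the cluster for each newly created Pod, and then to bind the Pod to that node.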

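Create the kube-scheduler certificate and private key, and distribute them to the nodes (on k8s-deploy):

Create the certificate signing request (CSR) file for kube-scheduler: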

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kube-scheduler-csr.json << EOF
{
  "CN": "system:kube-scheduler",
  "hosts": [
    "127.0.0.1",
    "10.1.32.231",
    "10.1.32.232",
    "10.1.32.233",
    "10.1.32.240"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "system:kube-scheduler",
      "OU": "ops"
    }
  ]
}
EOF
```

- `CN` and `O` must both be `system:kube-scheduler`. The API server takes the user name from `CN`, and the built-in ClusterRoleBinding `system:kube-scheduler` grants that user the permissions kube-scheduler needs.
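A wrong `CN` or `O` only shows up later, once the scheduler starts talking to the API server. The CSR file can be checked before it is signed. The sketch below is a hypothetical POSIX-shell helper: `check_csr_identity` is not part of the original procedure. It assumes one `"key": "value"` pair per line, as in the CSR generated above.

```shell
# Hypothetical helper (not part of the original procedure): succeed only if
# both CN and O in a cfssl CSR JSON file equal "system:kube-scheduler".
# Assumes each "key": "value" pair sits on a single line.
check_csr_identity() {
    csr_file=$1
    expected='system:kube-scheduler'
    for field in CN O; do
        # Matches e.g.:  "CN": "system:kube-scheduler",
        # The quote after the field name keeps "O" from matching "OU".
        if ! grep -Eq "\"${field}\"[[:space:]]*:[[:space:]]*\"${expected}\"" "$csr_file"; then
            echo "ERROR: ${field} in ${csr_file} is not ${expected}" >&2
            return 1
        fi
    done
    echo "OK: CN and O in ${csr_file} are both ${expected}"
}
```

Run `check_csr_identity kube-scheduler-csr.json` before `cfssl gencert`. A non-zero exit status means the CSR should be fixed first.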

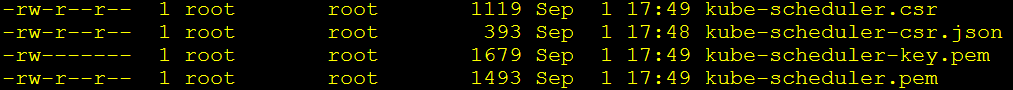

Generate the kube-scheduler certificate, as shown in the figure:

```shell
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler
```

Distribute the kube-scheduler certificate to each master node:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_MASTER_IPS[@]}"
do
  echo ">>> ${node_ip}"
  scp kube-scheduler*.pem root@${node_ip}:/etc/kubernetes/cert/
done
```
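The certificate, kubeconfig, configuration file and service file are all pushed to the masters with the same `for`/`scp` loop. That loop could be folded into a small function such as the hypothetical `distribute_to_masters` below. This is a sketch that assumes root SSH access to every master, as the loops in this guide already do. The `DRY_RUN` switch is an addition of this sketch: it prints the `scp` commands instead of running them.

```shell
# Hypothetical helper (not part of the original procedure).
# Usage: distribute_to_masters DEST "IP1 IP2 ..." FILE...
# Copies every FILE to DEST on each master; DRY_RUN=1 only prints the commands.
distribute_to_masters() {
    dest=$1
    node_ips=$2
    shift 2
    # The remaining arguments ("$@") are the local files to copy.
    for node_ip in $node_ips; do   # unquoted on purpose: split the IP list on spaces
        echo ">>> ${node_ip}"
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp $* root@${node_ip}:${dest}"
        else
            scp "$@" "root@${node_ip}:${dest}" || return 1
        fi
    done
}
```

For the certificates, the call would be `distribute_to_masters /etc/kubernetes/cert/ "${NODE_MASTER_IPS[*]}" kube-scheduler*.pem`. The IP list is passed as a single space-separated argument so that a glob such as `kube-scheduler*.pem` can expand into several files after it.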

Create the kubeconfig file for kube-scheduler (on k8s-deploy):

Set the cluster parameters in the kubeconfig file:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-scheduler.kubeconfig
```

Set the user credentials that the kubeconfig file uses:

```shell
kubectl config set-credentials system:kube-scheduler \
  --client-certificate=kube-scheduler.pem \
  --client-key=kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-scheduler.kubeconfig
```

Set the context parameters in the kubeconfig file:

```shell
kubectl config set-context system:kube-scheduler \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=kube-scheduler.kubeconfig
```

Set the default context of the kubeconfig file:

```shell
kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig
```

Distribute the kubeconfig file used by kube-scheduler to each master node:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_MASTER_IPS[@]}"
do
  echo ">>> ${node_ip}"
  scp kube-scheduler.kubeconfig root@${node_ip}:/etc/kubernetes/
done
```

Create the kube-scheduler configuration file and distribute it to the nodes (on k8s-deploy):

Create the kube-scheduler configuration file:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kube-scheduler.yaml << EOF
apiVersion: kubescheduler.config.k8s.io/v1alpha1
kind: KubeSchedulerConfiguration
bindTimeoutSeconds: 600
clientConnection:
  burst: 200
  kubeconfig: "/etc/kubernetes/kube-scheduler.kubeconfig"
  qps: 100
enableContentionProfiling: false
enableProfiling: true
hardPodAffinitySymmetricWeight: 1
healthzBindAddress: 0.0.0.0:10251
leaderElection:
  leaderElect: true
metricsBindAddress: 0.0.0.0:10251
EOF
```

- Do not set `healthzBindAddress` or `metricsBindAddress` to the host's own IP address. If you do, `kubectl get cs` reports an error, because the API server probes the scheduler's health endpoint on 127.0.0.1:10251.
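The note above suggests checking the two bind addresses before the file is copied to the masters. The following is a minimal sketch with a hypothetical function name. It assumes each key sits on its own line in `key: host:port` form, as in the `kube-scheduler.yaml` generated here, and it accepts only `0.0.0.0` or `127.0.0.1` as the host.

```shell
# Hypothetical check (not part of the original procedure): fail if
# healthzBindAddress or metricsBindAddress binds to anything other than
# 0.0.0.0 or 127.0.0.1. Assumes "key: host:port" on a single line.
check_bind_addresses() {
    config=$1
    rc=0
    for key in healthzBindAddress metricsBindAddress; do
        value=$(sed -n "s/^[[:space:]]*${key}:[[:space:]]*//p" "$config")
        host=${value%:*}   # strip the trailing :port
        case $host in
            0.0.0.0|127.0.0.1)
                echo "OK: ${key}=${value}" ;;
            *)
                echo "WARN: ${key}=${value:-<unset>} is not reachable on 127.0.0.1" >&2
                rc=1 ;;
        esac
    done
    return $rc
}
```

`check_bind_addresses kube-scheduler.yaml` prints one `OK` line per key. It returns a non-zero status if either address points at another host or is missing.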

Distribute the kube-scheduler configuration file to each master node:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_MASTER_IPS[@]}"
do
  echo ">>> ${node_ip}"
  scp kube-scheduler.yaml root@${node_ip}:/etc/kubernetes/kube-scheduler.yaml
done
```

Create the kube-scheduler service file and distribute it to the nodes (on k8s-deploy):

Create the kube-scheduler service file template:

cd /opt/k8s/work

source /opt/k8s/bin/environment.sh

cat > kube-scheduler.service.template << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

WorkingDirectory=${K8S_DIR}/kube-scheduler

ExecStart=/opt/k8s/bin/kube-scheduler \\

--config=/etc/kubernetes/kube-scheduler.yaml \\

--bind-address=##NODE_IP## \\

--secure-port=10259 \\

--port=0 \\

--tls-cert-file=/etc/kubernetes/cert/kube-scheduler.pem \\

--tls-private-key-file=/etc/kubernetes/cert/kube-scheduler-key.pem \\

--authentication-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\

--client-ca-file=/etc/kubernetes/cert/ca.pem \\

--requestheader-allowed-names="aggregator" \\

--requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\

--requestheader-extra-headers-prefix="X-Remote-Extra-" \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-username-headers=X-Remote-User \\

--authorization-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\

--logtostderr=true \\

--v=2

Restart=always

RestartSec=5

StartLimitInterval=0

[Install]

WantedBy=multi-user.target

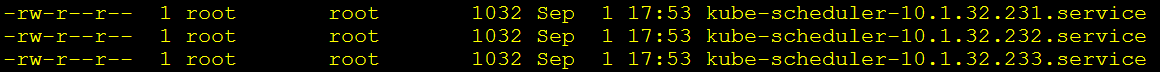

EOF为各节点生成kube-scheduler服务文件:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for (( i=0; i < ${#NODE_MASTER_IPS[@]}; i++ ))
do
  sed -e "s/##NODE_NAME##/${NODE_MASTER_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_MASTER_IPS[i]}/" kube-scheduler.service.template > kube-scheduler-${NODE_MASTER_IPS[i]}.service
done
```
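The loop above depends on `sed` finding every placeholder in the template. If a placeholder is misspelled, the unit file is still written, but it contains a literal `##NAME##` string. The hypothetical `render_unit` helper below is a sketch of the same substitution for a single node. It also refuses to keep a file that still contains an unreplaced placeholder. It assumes placeholders consist only of upper-case letters and underscores, like `##NODE_IP##` and `##NODE_NAME##`.

```shell
# Hypothetical helper (not part of the original procedure).
# Usage: render_unit TEMPLATE NODE_NAME NODE_IP
# Writes kube-scheduler-<NODE_IP>.service in the current directory and prints
# its name; deletes it and fails if any ##PLACEHOLDER## survived substitution.
render_unit() {
    template=$1 node_name=$2 node_ip=$3
    out="kube-scheduler-${node_ip}.service"
    sed -e "s/##NODE_NAME##/${node_name}/g" \
        -e "s/##NODE_IP##/${node_ip}/g" "$template" > "$out" || return 1
    if grep -q '##[A-Z_]*##' "$out"; then
        echo "ERROR: unreplaced placeholder in ${out}" >&2
        rm -f "$out"
        return 1
    fi
    echo "$out"
}
```

The node lists are bash arrays, so the helper would be called from a bash loop such as `for i in "${!NODE_MASTER_IPS[@]}"; do render_unit kube-scheduler.service.template "${NODE_MASTER_NAMES[i]}" "${NODE_MASTER_IPS[i]}"; done`.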

Distribute the service files to each master node:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_MASTER_IPS[@]}"
do
  echo ">>> ${node_ip}"
  scp kube-scheduler-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-scheduler.service
done
```

Start the kube-scheduler service and verify it (on k8s-deploy):

Start the kube-scheduler service:

cd /opt/k8s/work

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kube-scheduler"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-scheduler && systemctl restart kube-scheduler"

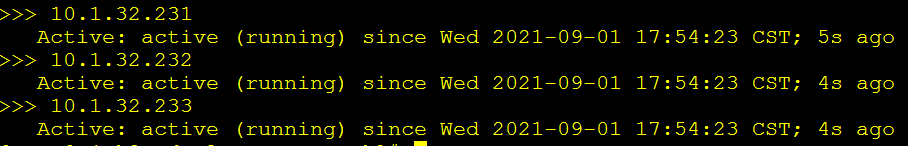

done验证kube-scheduler服务状态:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_MASTER_IPS[@]}"
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "systemctl status kube-scheduler | grep Active"
done
```
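With three masters the status output is easy to read by eye, but it can also be checked mechanically. The sketch below is a hypothetical filter, `report_not_running`, which is not part of the original procedure. It reads the `>>> IP` and `Active:` lines printed by the loop above and lists every master that is not `active (running)`. A master for which no `Active:` line was printed at all, for example because `ssh` failed, is also listed.

```shell
# Hypothetical filter (not part of the original procedure): reads the output
# of the status loop on stdin (">>> <ip>" lines, each followed by an
# "Active: ..." line), prints every node whose Active line is not
# "active (running)" or is missing, and exits with status 1 if it printed any.
report_not_running() {
    awk '
        /^>>> / {
            # The previous node printed no Active: line at all.
            if (ip != "" && !seen) { print ip; bad = 1 }
            ip = $2; seen = 0; next
        }
        /Active:/ {
            seen = 1
            if ($0 !~ /active \(running\)/) { print ip; bad = 1 }
        }
        END {
            if (ip != "" && !seen) { print ip; bad = 1 }
            exit bad
        }
    '
}
```

Pipe the whole `for ... done` loop into it, for example `for node_ip in "${NODE_MASTER_IPS[@]}"; do ...; done | report_not_running`. An empty output and exit status 0 mean every master reported `active (running)`.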

Fetch the metrics with curl:

# 以https方式启动:

curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem \

https://10.1.32.231:10259/metrics | head

# 以http方式启动:

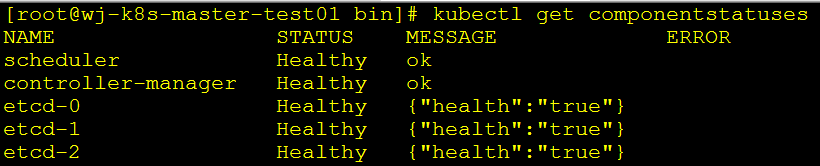

curl -s http://10.1.32.231:10251/metrics | head在任意一台master节点上执行以下命令,检测所有组件是否都正常启动:

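```shell
kubectl get componentstatuses
```

- If kube-controller-manager is started in HTTPS mode, this health check may fail, because `kubectl get cs` probes its insecure HTTP port on 127.0.0.1:10252.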
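A filter on the `STATUS` column is enough to turn the `kubectl get componentstatuses` output into a pass/fail result. The following is a minimal sketch; the function name is hypothetical. It assumes the default table output, where the first line is the header and the second column is `STATUS`. The ComponentStatus API is deprecated as of Kubernetes v1.19, so this check mainly applies to older releases like the one this guide appears to target.

```shell
# Hypothetical filter (not part of the original procedure): reads
# `kubectl get componentstatuses` table output on stdin, skips the header row,
# prints the NAME of every component whose STATUS is not "Healthy", and exits
# with status 1 if it printed anything.
unhealthy_components() {
    awk 'NR > 1 && $2 != "Healthy" { print $1; bad = 1 } END { exit bad }'
}
```

`kubectl get componentstatuses | unhealthy_components` prints nothing and exits 0 when every component is healthy.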

Last updated: 2021-09-03 14:30; author: 闻骏