Deployment environment

| IP address | Hostname | Role |
|---|---|---|
| 10.1.104.200 | k8s-deploy | Deployment node; runs no cluster components |
| 10.1.104.201 | k8s-master01 | Master node |
| 10.1.104.202 | k8s-master02 | Master node |
| 10.1.104.203 | k8s-master03 | Master node |
| 10.1.104.204 | k8s-nginx | Load balancer node (should be an HA setup in production) |
| 10.1.104.205 | k8s-node01 | Worker node |
| 10.1.104.206 | k8s-node02 | Worker node |
| 10.1.104.207 | k8s-node03 | Worker node |
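Every script below sources /opt/k8s/bin/environment.sh, which is not shown in this section. A hypothetical excerpt consistent with the table above might look like the following. The node arrays come from the table and the apiserver address is the load balancer address used in the final curl command of this section; the values of K8S_DIR, CLUSTER_DNS_DOMAIN, CLUSTER_DNS_SVC_IP and CLUSTER_CIDR are placeholder assumptions that must be replaced with your own cluster's settings.

```bash
#!/usr/bin/env bash
# Hypothetical excerpt of /opt/k8s/bin/environment.sh (assumed, not the original file).

# Worker node hostnames and IPs, listed in the same order (from the table above)
NODE_NODE_NAMES=(k8s-node01 k8s-node02 k8s-node03)
NODE_NODE_IPS=(10.1.104.205 10.1.104.206 10.1.104.207)

# kube-apiserver as reached through the k8s-nginx load balancer
KUBE_APISERVER="https://10.1.104.204:8443"

# Placeholder values (assumptions): adjust to your own cluster
K8S_DIR="/data/k8s/k8s"
CLUSTER_DNS_DOMAIN="cluster.local"
CLUSTER_DNS_SVC_IP="10.254.0.2"
CLUSTER_CIDR="172.30.0.0/16"
```

Because the scp and ssh loops address nodes as root@<hostname> or root@<ip>, k8s-deploy presumably resolves the hostnames (for example through /etc/hosts) and has key-based root SSH access to every worker.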

Deploying kubelet

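kubelet runs on every worker node. It receives requests sent by kube-apiserver, manages Pod containers, and executes interactive commands such as exec, run and logs.

When kubelet starts, it automatically registers the node with kube-apiserver, and its built-in cAdvisor collects and monitors the node's resource usage.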

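Create the kubelet bootstrap kubeconfig files and distribute them to the nodes (k8s-deploy):

Generate the kubeconfig file that kubelet uses for bootstrapping: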

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_name in "${NODE_NODE_NAMES[@]}"
do
  echo ">>> ${node_name}"

  # Create a bootstrap token whose extra group is system:bootstrappers:<node name>
  export BOOTSTRAP_TOKEN=$(/opt/k8s/work/kubernetes/server/bin/kubeadm token create \
    --description kubelet-bootstrap-token \
    --groups system:bootstrappers:${node_name} \
    --kubeconfig kubectl.kubeconfig)

  # Cluster entry: embed the CA certificate and point at kube-apiserver
  kubectl config set-cluster kubernetes \
    --certificate-authority=/opt/k8s/work/ca.pem \
    --embed-certs=true \
    --server=${KUBE_APISERVER} \
    --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

  # User entry: authenticate with the bootstrap token
  kubectl config set-credentials kubelet-bootstrap \
    --token=${BOOTSTRAP_TOKEN} \
    --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

  # Context tying the cluster and user together, made the default
  kubectl config set-context default \
    --cluster=kubernetes \
    --user=kubelet-bootstrap \
    --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig
  kubectl config use-context default --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig
done
```

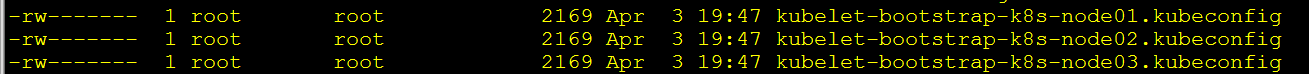
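Each generated kubelet-bootstrap-<node>.kubeconfig embeds a different bootstrap token. To match the files against the output of `kubeadm token list`, a small helper can print the token stored in a file. The function name `bootstrap_token_of` is hypothetical, and the awk match assumes the layout that `kubectl config set-credentials` writes, with the token on a `token: <value>` line under `users[].user`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the bootstrap token stored in a kubeconfig file.
# Assumes kubectl's default layout, where the token sits on a "token: <value>" line.
bootstrap_token_of() {
  awk '$1 == "token:" { print $2; exit }' "$1"
}

# Usage on k8s-deploy (illustrative):
# for node_name in "${NODE_NODE_NAMES[@]}"; do
#   echo "${node_name}: $(bootstrap_token_of kubelet-bootstrap-${node_name}.kubeconfig)"
# done
```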

List the tokens that kubeadm created for the nodes:

```bash
/opt/k8s/work/kubernetes/server/bin/kubeadm token list --kubeconfig kubectl.kubeconfig
```

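- The token is valid for 1 day. After it expires it can no longer be used to bootstrap kubelet, and it is removed by the tokencleaner controller of kube-controller-manager.

- When kube-apiserver receives a kubelet's bootstrap token, it sets the request's user to system:bootstrap:<Token ID> and its group to system:bootstrappers. A ClusterRoleBinding is created for this group in a later step.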

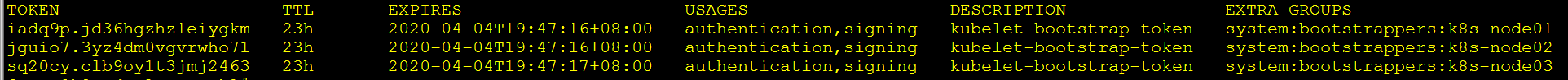
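A bootstrap token has the form `<token-id>.<token-secret>`: a 6-character ID and a 16-character secret, both drawn from `[a-z0-9]`. Only the ID ends up in the user name described above. The sketch below mirrors that naming rule; it is not kube-apiserver code, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive the user name kube-apiserver assigns to a bootstrap token.
bootstrap_user_for_token() {
  local token="$1"
  # Bootstrap token format: 6-char ID, a dot, 16-char secret, all [a-z0-9]
  if [[ ! "$token" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]; then
    echo "invalid bootstrap token: $token" >&2
    return 1
  fi
  # Keep only the part before the dot (the token ID)
  echo "system:bootstrap:${token%%.*}"
}
```

The resulting name is what should later appear in the REQUESTOR column of `kubectl get csr` for that node's first CSR.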

Distribute the bootstrap kubeconfig files to the worker nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_name in "${NODE_NODE_NAMES[@]}"
do
  echo ">>> ${node_name}"
  scp kubelet-bootstrap-${node_name}.kubeconfig root@${node_name}:/etc/kubernetes/kubelet-bootstrap.kubeconfig
done
```

Create the kubelet parameter configuration file and distribute it to the nodes (k8s-deploy) (also required when adding new nodes):

Create the kubelet parameter configuration file:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kubelet-config.yaml.template << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: "##NODE_IP##"
staticPodPath: ""
syncFrequency: 1m
fileCheckFrequency: 20s
httpCheckFrequency: 20s
staticPodURL: ""
port: 10250
readOnlyPort: 0
rotateCertificates: true
serverTLSBootstrap: true
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/etc/kubernetes/cert/ca.pem"
authorization:
  mode: Webhook
registryPullQPS: 0
registryBurst: 20
eventRecordQPS: 0
eventBurst: 20
enableDebuggingHandlers: true
enableContentionProfiling: true
healthzPort: 10248
healthzBindAddress: "##NODE_IP##"
clusterDomain: "${CLUSTER_DNS_DOMAIN}"
clusterDNS:
  - "${CLUSTER_DNS_SVC_IP}"
nodeStatusUpdateFrequency: 10s
nodeStatusReportFrequency: 1m
imageMinimumGCAge: 2m
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
volumeStatsAggPeriod: 1m
kubeletCgroups: ""
systemCgroups: ""
cgroupRoot: ""
cgroupsPerQOS: true
cgroupDriver: cgroupfs
runtimeRequestTimeout: 10m
hairpinMode: promiscuous-bridge
maxPods: 220
podCIDR: "${CLUSTER_CIDR}"
podPidsLimit: -1
resolvConf: /etc/resolv.conf
maxOpenFiles: 1000000
kubeAPIQPS: 1000
kubeAPIBurst: 2000
serializeImagePulls: false
evictionHard:
  memory.available: "100Mi"
  nodefs.available: "10%"
  nodefs.inodesFree: "5%"
  imagefs.available: "15%"
evictionSoft: {}
enableControllerAttachDetach: true
failSwapOn: true
containerLogMaxSize: 20Mi
containerLogMaxFiles: 10
systemReserved: {}
kubeReserved: {}
systemReservedCgroup: ""
kubeReservedCgroup: ""
enforceNodeAllocatable: ["pods"]
EOF
```

- address: the address on which the kubelet secure port (HTTPS, 10250) listens. It must not be 127.0.0.1, otherwise kube-apiserver, heapster and other components cannot call the kubelet API.

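- readOnlyPort=0: disables the read-only port (default 10255); this is equivalent to leaving it unspecified.

- authentication.anonymous.enabled: set to false so that anonymous access to port 10250 is not allowed.

- authentication.x509.clientCAFile: the CA certificate that signs client certificates; setting it enables HTTPS client-certificate authentication.

- authentication.webhook.enabled=true: enables HTTPS bearer-token authentication. Requests (from kube-apiserver or other clients) that pass neither x509 certificate authentication nor webhook authentication are rejected.

- authorization.mode=Webhook: kubelet uses the SubjectAccessReview API to ask kube-apiserver whether a given user or group is allowed to operate on a resource (RBAC).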
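Because a leftover `##NODE_IP##` placeholder or a loopback `address` would break the access rules above without an obvious error, the rendered per-node files can be checked before they are copied. The grep-based sketch below is only a rough check that assumes top-level keys start at the beginning of a line, as in the template; it is not a YAML validator, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for a rendered kubelet-config file.
check_kubelet_config() {
  local file="$1"
  # The sed step should have replaced every placeholder
  if grep -q '##NODE_IP##' "$file"; then
    echo "$file: unreplaced ##NODE_IP## placeholder" >&2
    return 1
  fi
  # address must not be loopback, or kube-apiserver cannot reach port 10250
  if grep -Eq '^address: *"?127\.0\.0\.1"?' "$file"; then
    echo "$file: address is 127.0.0.1" >&2
    return 1
  fi
  # readOnlyPort must stay disabled
  if ! grep -Eq '^readOnlyPort: *0$' "$file"; then
    echo "$file: readOnlyPort is not 0" >&2
    return 1
  fi
}

# Usage (illustrative), right after the sed step of the distribution loop:
# check_kubelet_config kubelet-config-${node_ip}.yaml.template || exit 1
```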

Distribute the kubelet parameter configuration file to the worker nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_NODE_IPS[@]}"
do
  echo ">>> ${node_ip}"
  # Fill in this node's IP, then copy the rendered file to the node
  sed -e "s/##NODE_IP##/${node_ip}/" kubelet-config.yaml.template > kubelet-config-${node_ip}.yaml.template
  scp kubelet-config-${node_ip}.yaml.template root@${node_ip}:/etc/kubernetes/kubelet-config.yaml
done
```

Create the kubelet systemd service file and distribute it to the nodes (k8s-deploy) (also required when adding new nodes):

Create the kubelet service file template:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kubelet.service.template << EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=${K8S_DIR}/kubelet
ExecStart=/opt/k8s/bin/kubelet \\
  --allow-privileged=true \\
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \\
  --cert-dir=/etc/kubernetes/cert \\
  --cni-conf-dir=/etc/cni/net.d \\
  --container-runtime=docker \\
  --container-runtime-endpoint=unix:///var/run/dockershim.sock \\
  --root-dir=${K8S_DIR}/kubelet \\
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\
  --config=/etc/kubernetes/kubelet-config.yaml \\
  --hostname-override=##NODE_NAME## \\
  --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1 \\
  --image-pull-progress-deadline=15m \\
  --volume-plugin-dir=${K8S_DIR}/kubelet/kubelet-plugins/volume/exec/ \\
  --logtostderr=true \\
  --v=2
Restart=always
RestartSec=5
StartLimitInterval=0

[Install]
WantedBy=multi-user.target
EOF
```

- --hostname-override: if this option is set, kube-proxy must be given the same option as well; otherwise kube-proxy cannot find the node.

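- --bootstrap-kubeconfig: points to the bootstrap kubeconfig file; kubelet uses the user name and token in this file to send a TLS Bootstrapping request to kube-apiserver.

- --cert-dir: after Kubernetes approves kubelet's CSR, the certificate and private key are created in this directory.

- --kubeconfig: after Kubernetes approves kubelet's CSR, the resulting configuration is written to this file.

- --pod-infra-container-image: do not use Red Hat's pod-infrastructure:latest image, because it cannot reap zombie processes in the container.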
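The unit template is written with an unquoted heredoc (`<< EOF`), so the shell expands `${K8S_DIR}` when the template is generated, each `\\` collapses to a single `\` that systemd reads as a line continuation, and `##NODE_NAME##` passes through untouched for the later sed step. A minimal demonstration, with K8S_DIR set to an assumed value:

```bash
#!/usr/bin/env bash
# Demonstrates how an unquoted heredoc treats the unit-template syntax.
K8S_DIR="/data/k8s/k8s"   # assumed value for illustration

render_unit_snippet() {
  cat << EOF
WorkingDirectory=${K8S_DIR}/kubelet
ExecStart=/opt/k8s/bin/kubelet \\
  --hostname-override=##NODE_NAME##
EOF
}

render_unit_snippet
# Output:
# WorkingDirectory=/data/k8s/k8s/kubelet
# ExecStart=/opt/k8s/bin/kubelet \
#   --hostname-override=##NODE_NAME##
```

Writing a single `\` in the template instead would make the shell treat backslash-newline as a continuation of the heredoc text itself, joining the lines before systemd ever sees them.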

Distribute the kubelet service file to the worker nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_name in "${NODE_NODE_NAMES[@]}"
do
  echo ">>> ${node_name}"
  # Fill in the node name for --hostname-override, then install the unit file
  sed -e "s/##NODE_NAME##/${node_name}/" kubelet.service.template > kubelet-${node_name}.service
  scp kubelet-${node_name}.service root@${node_name}:/etc/systemd/system/kubelet.service
done
```

Create a ClusterRoleBinding that binds the group system:bootstrappers to the ClusterRole system:node-bootstrapper (run on any one master):

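```bash
kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers
```

- At startup, kubelet checks whether the file given by --kubeconfig exists. If it does not, kubelet uses the kubeconfig file given by --bootstrap-kubeconfig to send a certificate signing request (CSR) to kube-apiserver.

- When kube-apiserver receives the CSR, it authenticates the token in it. Once authentication succeeds, it sets the request's user to system:bootstrap:<Token ID> and its group to system:bootstrappers. This process is called Bootstrap Token Auth.

- By default this user and group are not allowed to create CSRs, so kubelet would fail to start; that is why the ClusterRoleBinding above is required.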

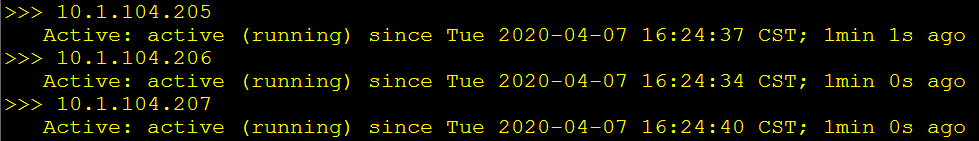
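The startup decision described above can be summarized in a simplified sketch. It only illustrates the documented behavior (real kubelet also checks whether the existing credentials are still usable); it is not kubelet source code, and the function name is hypothetical. The two arguments correspond to the --kubeconfig and --bootstrap-kubeconfig paths in the unit file.

```bash
#!/usr/bin/env bash
# Simplified, illustrative sketch of which credentials kubelet starts with.
# $1: path given by --kubeconfig, $2: path given by --bootstrap-kubeconfig
kubelet_credentials_source() {
  local kubeconfig="$1" bootstrap="$2"
  if [ -f "$kubeconfig" ]; then
    # Already bootstrapped: use the issued certificate
    echo "use ${kubeconfig}"
  elif [ -f "$bootstrap" ]; then
    # No kubeconfig yet: send a CSR to kube-apiserver with the bootstrap token
    echo "bootstrap via ${bootstrap}"
  else
    echo "no credentials" >&2
    return 1
  fi
}

# Usage (illustrative), on a worker node:
# kubelet_credentials_source /etc/kubernetes/kubelet.kubeconfig \
#                            /etc/kubernetes/kubelet-bootstrap.kubeconfig
```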

Start kubelet and verify it (k8s-deploy):

Start the kubelet service on each worker node:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_NODE_IPS[@]}"
do
  echo ">>> ${node_ip}"
  # Create the volume plugin directory referenced by --volume-plugin-dir
  ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kubelet/kubelet-plugins/volume/exec/"
  # Swap must be off because the configuration sets failSwapOn: true
  ssh root@${node_ip} "/usr/sbin/swapoff -a"
  ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kubelet && systemctl restart kubelet"
done
```

Check whether kubelet is running on each node:

```bash
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_NODE_IPS[@]}"
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "systemctl status kubelet | grep Active"
done
```

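- After starting, kubelet listens on two ports:

  - 10248: the healthz HTTP service.

  - 10250: the HTTPS service; requests to this port require authentication and authorization (even for /healthz).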

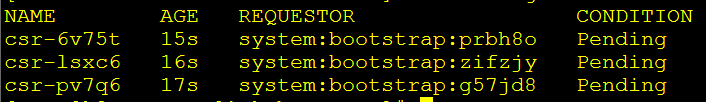
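Both ports can be probed from k8s-deploy. The sketch assumes curl is installed. Since healthzBindAddress is the node IP, /healthz on 10248 is probed at that IP and is expected to answer `ok`; with `authentication.anonymous.enabled: false`, an unauthenticated request to 10250 is expected to be rejected with HTTP 401. Both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical probes for the two kubelet ports.

# 10248: plain-HTTP healthz endpoint, expected to answer "ok"
check_kubelet_healthz() {
  local node_ip="$1"
  [ "$(curl -s --max-time 3 "http://${node_ip}:10248/healthz")" = "ok" ]
}

# 10250: HTTPS API; a request without credentials should get HTTP 401
kubelet_rejects_anonymous() {
  local node_ip="$1" code
  code="$(curl -sk --max-time 3 -o /dev/null -w '%{http_code}' "https://${node_ip}:10250/healthz")"
  [ "$code" = "401" ]
}

# Usage (illustrative), with environment.sh sourced:
# for node_ip in "${NODE_NODE_IPS[@]}"; do
#   check_kubelet_healthz "$node_ip" && kubelet_rejects_anonymous "$node_ip" \
#     && echo "${node_ip}: ok"
# done
```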

List the current certificate signing requests; at this point the CSRs are still Pending (run on any master node):

```bash
kubectl get csr
```

Approve the CSRs (k8s-master):

Configure automatic approval of CSRs:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > /opt/k8s/work/csr-crb.yaml << EOF
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-client-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: approve-node-server-renewal-csr
rules:
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests/selfnodeserver"]
  verbs: ["create"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-server-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: approve-node-server-renewal-csr
  apiGroup: rbac.authorization.k8s.io
EOF
```

- auto-approve-csrs-for-group: automatically approves a node's first CSR. Note that for the first CSR the requesting Group is system:bootstrappers.

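- node-client-cert-renewal: automatically approves the node's later requests to renew its expiring client certificate; the Group in the automatically generated certificate is system:nodes.

- node-server-cert-renewal: automatically approves the node's later requests to renew its expiring server certificate; the Group in the automatically generated certificate is system:nodes.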

Apply the file with kubectl:

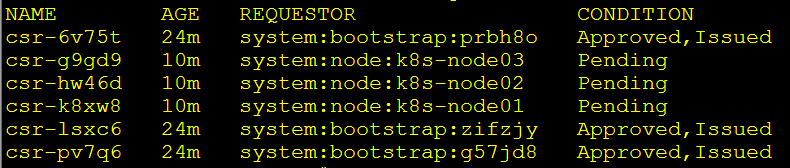

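```bash
kubectl apply -f csr-crb.yaml
```

After about 10 minutes, the bootstrap certificate requests have been approved automatically, but the kubelet server certificate requests still have to be approved manually: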

```bash
kubectl get csr
```

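Manually approve the kubelet server requests (the CSR names below come from this environment; use the names listed by kubectl get csr):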

kubectl certificate approve csr-g9gd9

kubectl certificate approve csr-hw46d

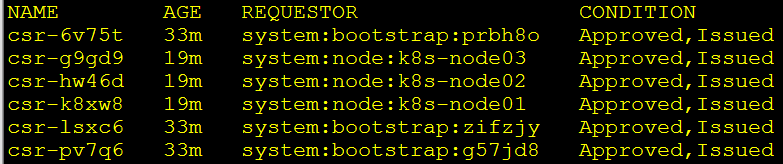

kubectl certificate approve csr-k8xw8再次查看csr请求:

```bash
kubectl get csr
```

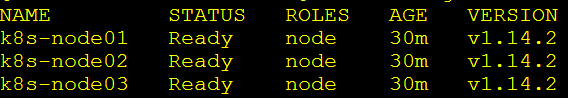
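When several nodes join at once, the pending requests can be picked out of `kubectl get csr` instead of being copied by hand. The hypothetical helper below reads the table from stdin and prints the names of rows whose last column (CONDITION) is `Pending`; an optional substring such as `kubelet-serving` narrows the selection to server-certificate requests, assuming a kubectl version that prints a SIGNERNAME column. Because a kubelet serving CSR asserts the node's hostnames and IPs, it is prudent to inspect each one with `kubectl describe csr <name>` before approving it.

```bash
#!/usr/bin/env bash
# Hypothetical filter: print names of Pending CSRs from "kubectl get csr --no-headers".
# $1 (optional): keep only rows containing this substring, e.g. "kubelet-serving".
pending_csrs() {
  local pattern="${1:-}"
  awk -v pat="$pattern" '$NF == "Pending" && (pat == "" || index($0, pat)) { print $1 }'
}

# Usage (illustrative), on a master node after reviewing the requests:
# kubectl get csr --no-headers | pending_csrs kubelet-serving \
#   | xargs -r -n1 kubectl certificate approve
```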

Check the nodes and label the worker nodes:

```bash
kubectl get nodes
kubectl label nodes k8s-node01 node-role.kubernetes.io/node=
kubectl label nodes k8s-node02 node-role.kubernetes.io/node=
kubectl label nodes k8s-node03 node-role.kubernetes.io/node=
kubectl get nodes
```

- To remove the label, run `kubectl label nodes k8s-node01 node-role.kubernetes.io/node-`.
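With more worker nodes, the three label commands generalize to a loop over NODE_NODE_NAMES from environment.sh. Adding `--overwrite` lets the loop be re-run on nodes that already carry the label, where kubectl would otherwise refuse to change an existing label. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: give every listed node the "node" role label.
label_worker_nodes() {
  local node
  for node in "$@"; do
    # --overwrite makes re-runs safe on nodes that are already labeled
    kubectl label nodes "$node" node-role.kubernetes.io/node= --overwrite
  done
}

# Usage (illustrative), on a master with environment.sh sourced:
# label_worker_nodes "${NODE_NODE_NAMES[@]}"
```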

Verify kubelet authentication, authorization and metrics retrieval with client certificates (k8s-deploy):

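```bash
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_NODE_IPS[@]}"
do
  echo ">>> ${node_ip}"
  # The admin certificate is signed by ca.pem, so kubelet's x509 authentication accepts it
  curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://${node_ip}:10250/metrics | head
done
```

Fetch a node's kubelet configuration through kube-apiserver (k8s-deploy):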

```bash
curl -sSL --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem \
  https://10.1.104.204:8443/api/v1/nodes/k8s-node01/proxy/configz | jq \
  '.kubeletconfig|.kind="KubeletConfiguration"|.apiVersion="kubelet.config.k8s.io/v1beta1"'
```

Document last updated: 2021-09-02 12:48. Author: 闻骏