Deployment environment

| IP address | Hostname | Role |
|---|---|---|
| 10.1.32.230 | k8s-deploy-test | Deployment node; runs no cluster workloads |
| 10.1.32.231 | k8s-master-test01 | master node |
| 10.1.32.232 | k8s-master-test02 | master node |
| 10.1.32.233 | k8s-master-test03 | master node |
| 10.1.32.240 | k8s-nginx-test | Load balancer node; should be an HA setup in production |
| 10.1.32.234 | k8s-node01-test01 | worker node |
| 10.1.32.235 | k8s-node02-test02 | worker node |
| 10.1.32.236 | k8s-node03-test03 | worker node |

Highly available deployment of kube-controller-manager

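The Kubernetes controller manager is a daemon that embeds the core control loops shipped with Kubernetes; these loops regulate the state of the system.

In Kubernetes, each controller is a control loop that watches the shared state of the cluster through the API server and makes changes that move the current state toward the desired state.

Controllers that currently ship with Kubernetes include the replication controller, node controller, namespace controller, and service account controller.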
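The "observe, compare, act" idea behind a control loop can be sketched in a few lines of shell. This is only an illustration: the reconcile function and the replica counts are hypothetical and are not part of Kubernetes.

```shell
# Hypothetical reconcile step (illustration only, not Kubernetes code):
# compare the desired replica count with the observed one and print the
# action a controller would take.
reconcile() {
  want=$1
  have=$2
  if [ "$have" -lt "$want" ]; then
    echo "create $((want - have))"
  elif [ "$have" -gt "$want" ]; then
    echo "delete $((have - want))"
  else
    echo "noop"
  fi
}

# A control loop repeats the same three steps: observe, compare, act.
desired=3
current=1
while [ "$(reconcile "$desired" "$current")" != "noop" ]; do
  echo "observed=${current} desired=${desired} -> $(reconcile "$desired" "$current")"
  current=$desired   # pretend the action succeeded
done
echo "converged at ${current}"
```

A real controller never assumes its action succeeded; it observes the cluster again on the next iteration, which is what makes the loop self-correcting.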

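Create the kube-controller-manager certificate and private key and distribute them to each node (run on k8s-deploy):

Create the kube-controller-manager certificate signing request:

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kube-controller-manager-csr.json << EOF
{
  "CN": "system:kube-controller-manager",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "hosts": [
    "127.0.0.1",
    "10.1.32.231",
    "10.1.32.232",
    "10.1.32.233",
    "10.1.32.240"
  ],
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "system:kube-controller-manager",
      "OU": "ops"
    }
  ]
}
EOF

- CN and O must both be system:kube-controller-manager; the built-in ClusterRoleBinding system:kube-controller-manager grants this identity the permissions kube-controller-manager needs.

- hosts lists the IPs of all kube-controller-manager nodes (here together with 127.0.0.1 and the load balancer address).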

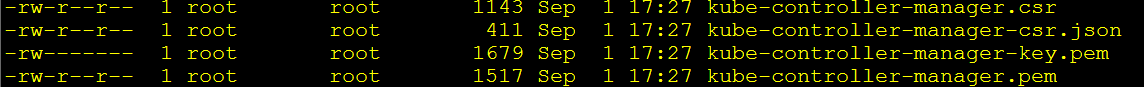
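Because hosts has to name every kube-controller-manager node, it can be convenient to generate the array from the same IP list that the distribution loops use rather than typing it by hand. The sketch below assumes a hypothetical helper, render_hosts, which is not part of cfssl or of environment.sh.

```shell
# Hypothetical helper: print a JSON "hosts" array built from the IP
# addresses passed as arguments.
render_hosts() {
  printf '"hosts": [\n'
  first=1
  for ip in "$@"; do
    if [ "$first" -eq 1 ]; then
      first=0
    else
      printf ',\n'
    fi
    printf '  "%s"' "$ip"
  done
  printf '\n]\n'
}

# Possible use in bash after sourcing environment.sh (assumes the
# NODE_MASTER_IPS array used elsewhere in this guide):
#   render_hosts 127.0.0.1 "${NODE_MASTER_IPS[@]}" 10.1.32.240
render_hosts 127.0.0.1 10.1.32.231
```

The output still has to be pasted into kube-controller-manager-csr.json; the helper only keeps the list in step with the node inventory.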

Generate the kube-controller-manager certificate:

cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
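Before distributing the certificate it may be worth confirming that the subject carries the CN and O set in the signing request. The following is a minimal sketch: subject_ok is a hypothetical helper, and it assumes the subject line comes from openssl x509 -noout -subject, whose spacing around "=" differs between OpenSSL versions.

```shell
# Hypothetical helper: succeed when an openssl subject line carries the
# CN and O that the signing request sets.
subject_ok() {
  want="system:kube-controller-manager"
  # Normalise "CN = x" and "CN=x" to one form, since the spacing
  # depends on the OpenSSL version.
  flat=$(printf '%s' "$1" | sed 's/ *= */=/g')
  case "$flat" in
    *"CN=${want}"*) ;;
    *) return 1 ;;
  esac
  case "$flat" in
    *"O=${want}"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Possible use against the generated certificate:
#   subject_ok "$(openssl x509 -in kube-controller-manager.pem -noout -subject)" \
#     && echo "subject looks right"
```

The check only inspects the subject; the SAN list from hosts can be inspected separately with openssl x509 -in kube-controller-manager.pem -noout -text.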

Distribute the kube-controller-manager certificate to each master node:

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_MASTER_IPS[@]}"
do
  echo ">>> ${node_ip}"
  scp kube-controller-manager*.pem root@${node_ip}:/etc/kubernetes/cert/
done

Create the kubeconfig file and distribute it to each node (run on k8s-deploy):

Set the cluster parameters of the kubeconfig file:

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-controller-manager.kubeconfig

Set the user credentials used by the kubeconfig file:

kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=kube-controller-manager.pem \
  --client-key=kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

Set the context parameters of the kubeconfig file:

kubectl config set-context system:kube-controller-manager \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=kube-controller-manager.kubeconfig

Set the default context of the kubeconfig file:

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

Distribute the kubeconfig file used by kube-controller-manager to each master node:

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_MASTER_IPS[@]}"
do
  echo ">>> ${node_ip}"
  scp kube-controller-manager.kubeconfig root@${node_ip}:/etc/kubernetes/
done

Create the kube-controller-manager service file and distribute it to each node (run on k8s-deploy):

Create the kube-controller-manager service template file:

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kube-controller-manager.service.template << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=${K8S_DIR}/kube-controller-manager
ExecStart=/opt/k8s/bin/kube-controller-manager \\
  --profiling \\
  --cluster-name=kubernetes \\
  --controllers=*,bootstrapsigner,tokencleaner \\
  --kube-api-qps=1000 \\
  --kube-api-burst=2000 \\
  --leader-elect \\
  --use-service-account-credentials \\
  --concurrent-service-syncs=2 \\
  --bind-address=##NODE_IP## \\
  --tls-cert-file=/etc/kubernetes/cert/kube-controller-manager.pem \\
  --tls-private-key-file=/etc/kubernetes/cert/kube-controller-manager-key.pem \\
  --authentication-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-allowed-names="aggregator" \\
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-username-headers=X-Remote-User \\
  --authorization-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --cluster-signing-cert-file=/etc/kubernetes/cert/ca.pem \\
  --cluster-signing-key-file=/etc/kubernetes/cert/ca-key.pem \\
  --experimental-cluster-signing-duration=876000h \\
  --feature-gates=RotateKubeletServerCertificate=true \\
  --horizontal-pod-autoscaler-sync-period=10s \\
  --concurrent-deployment-syncs=10 \\
  --concurrent-gc-syncs=30 \\
  --node-cidr-mask-size=24 \\
  --service-cluster-ip-range=${SERVICE_CIDR} \\
  --pod-eviction-timeout=6m \\
  --terminated-pod-gc-threshold=10000 \\
  --root-ca-file=/etc/kubernetes/cert/ca.pem \\
  --service-account-private-key-file=/etc/kubernetes/cert/ca-key.pem \\
  --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --logtostderr=true \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target

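EOF

- --port=0 disables the insecure (http) port; with it set, --address is ignored and --bind-address takes effect. Note that the template above does not set this flag.

- --secure-port=10252 listens on port 10252 on all network interfaces for https /metrics requests. The template above does not set this flag either, so the default secure port applies (10257, the port used by the https curl check at the end of this document).

- With both of these flags set, kubectl get cs reports an error because it cannot probe the kube-controller-manager http port; the cluster itself keeps working normally.

- --kubeconfig: path to the kubeconfig file that kube-controller-manager uses to connect to and authenticate against kube-apiserver.

- --authentication-kubeconfig and --authorization-kubeconfig: kube-controller-manager uses these to connect to the apiserver in order to authenticate and authorize client requests.

- kube-controller-manager no longer uses --tls-ca-file to verify the client certificates of https metrics requests.

- If these two kubeconfig flags are not configured, client requests to the kube-controller-manager https port are rejected (insufficient permissions).

- --requestheader-allowed-names="aggregator": must not be empty, otherwise the service fails to start.

- --cluster-signing-*-file: the certificate and key used to sign certificates created through TLS bootstrapping.

- --experimental-cluster-signing-duration: the validity period of TLS bootstrap certificates.

- --root-ca-file: the CA certificate placed into each container's ServiceAccount, used to verify the kube-apiserver certificate.

- --service-account-private-key-file: the private key used to sign ServiceAccount tokens; it must pair with the public key file given to kube-apiserver via --service-account-key-file.

- --service-cluster-ip-range: the Service cluster IP range; it must match the flag of the same name on kube-apiserver.

- --leader-elect=true: run in clustered mode with leader election enabled; the instance elected leader does the work while the other instances block.

- --controllers=*,bootstrapsigner,tokencleaner: the list of enabled controllers; tokencleaner automatically removes expired bootstrap tokens.

- --horizontal-pod-autoscaler-*: flags related to custom metrics; support autoscaling/v2alpha1.

- --tls-cert-file and --tls-private-key-file: the server certificate and key used when serving metrics over https.

- --use-service-account-credentials=true: each controller inside kube-controller-manager accesses kube-apiserver with its own ServiceAccount.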

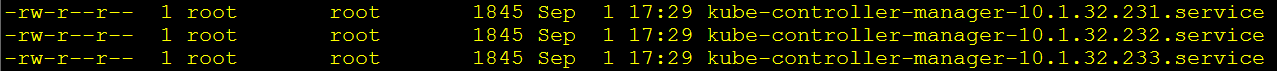
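Since the notes above describe more flags than a given template may actually set, a quick scan of the unit file can show which documented flags are absent. This is a sketch under assumptions: missing_flags is a hypothetical helper, and the flag names passed to it are whichever ones you want to audit.

```shell
# Hypothetical helper: print every flag (second argument onwards) that
# does not appear in the unit file named by the first argument.
missing_flags() {
  unit=$1
  shift
  for flag in "$@"; do
    # A flag counts as present when it is followed by "=", a space,
    # or the end of the line.
    grep -Eq -e "${flag}(=| |\$)" "$unit" || echo "$flag"
  done
}

# Possible use against the template created above:
#   missing_flags kube-controller-manager.service.template \
#     --port --secure-port --leader-elect --kubeconfig
```

A flag reported as missing is not necessarily an error; it only means the component falls back to its default for that setting.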

Generate a kube-controller-manager service file for each node:

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for (( i=0; i < 3; i++ ))
do
  sed -e "s/##NODE_NAME##/${NODE_MASTER_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_MASTER_IPS[i]}/" kube-controller-manager.service.template > kube-controller-manager-${NODE_MASTER_IPS[i]}.service
done
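The substitution step can be tried on a single line before it is run across all nodes, and the rendered files can be checked for leftover placeholders. Both helpers below are hypothetical names introduced for illustration; the sed expressions are the same ones used in the loop above.

```shell
# Hypothetical helper: substitute the ##NODE_NAME## and ##NODE_IP##
# placeholders in whatever arrives on stdin.
render_unit() {
  sed -e "s/##NODE_NAME##/$1/" -e "s/##NODE_IP##/$2/"
}

# Hypothetical helper: succeed only if no ##PLACEHOLDER## token is left
# in the given file.
no_placeholders_left() {
  ! grep -q '##[A-Z_]*##' "$1"
}

# Try the substitution on one template line.
printf '%s\n' '--bind-address=##NODE_IP## \' | render_unit k8s-master-test01 10.1.32.231

# Possible use on a rendered file:
#   no_placeholders_left kube-controller-manager-10.1.32.231.service \
#     || echo "unrendered placeholder found"
```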

将kube-controller-manager配置文件复制到各节点:

cd /opt/k8s/work

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-controller-manager.service

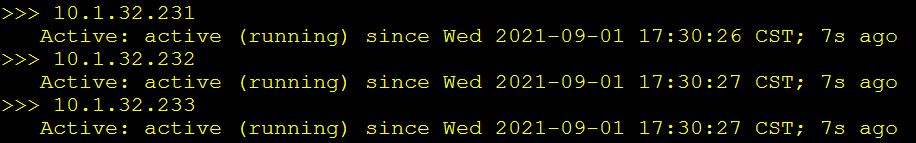

done启动kube-controller-manager服务并验证(k8s-deploy):

启动各节点的kube-controller-manager服务:

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in "${NODE_MASTER_IPS[@]}"
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kube-controller-manager"
  ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager"
done

Verify that kube-controller-manager has started on each node:

for node_ip in "${NODE_MASTER_IPS[@]}"
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "systemctl status kube-controller-manager|grep Active"
done
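With several masters, reading the Active lines by eye is easy to get wrong, so the status output can be reduced to a list of nodes that need attention. The helper below is a hypothetical sketch; it assumes each input line is a node IP followed by the Active line that systemctl status prints.

```shell
# Hypothetical helper: read "<ip> <Active line>" pairs on stdin and
# print the IPs whose service is not reported as running.
not_running() {
  while read -r ip rest; do
    case "$rest" in
      *"Active: active (running)"*) ;;
      *) echo "$ip" ;;
    esac
  done
}

# Possible use with the verification loop above (bash, after sourcing
# environment.sh):
#   for node_ip in "${NODE_MASTER_IPS[@]}"; do
#     printf '%s %s\n' "$node_ip" \
#       "$(ssh root@${node_ip} 'systemctl status kube-controller-manager | grep Active')"
#   done | not_running
```

An empty result means every node reported active (running); a node whose ssh call returned nothing is listed as well, since its state is unknown.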

Fetch the metrics with curl:

# Over https:
curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem \
  https://10.1.32.231:10257/metrics | head
# Over http:
curl -s http://10.1.32.231:10252/metrics | head

Document last updated: 2021-09-03 14:27. Author: 闻骏