Deployment environment

| IP address | Hostname | Role |
|---|---|---|
| 10.1.104.200 | k8s-deploy | Deployment node; plays no role in the cluster itself |
| 10.1.104.201 | k8s-master01 | Master node |
| 10.1.104.202 | k8s-master02 | Master node |
| 10.1.104.203 | k8s-master03 | Master node |
| 10.1.104.204 | k8s-nginx | Load-balancer node; should be an HA setup in production |
| 10.1.104.205 | k8s-node01 | Worker node |
| 10.1.104.206 | k8s-node02 | Worker node |
| 10.1.104.207 | k8s-node03 | Worker node |
| 10.1.104.208 | k8s-node04 | Worker node (new) |
| 10.1.104.209 | k8s-node05 | Worker node (new) |

System initialization

Add hosts entries for the new nodes (k8s-deploy, k8s-master):

cat >> /etc/hosts << EOF

10.1.104.208 k8s-node04

10.1.104.209 k8s-node05

EOF

Configure passwordless SSH login from the k8s-deploy node to the other nodes (k8s-deploy):

ssh-copy-id root@k8s-node04

ssh-copy-id root@k8s-node05

Verify that name resolution and passwordless login work (k8s-deploy):

hostname=(k8s-node04 k8s-node05)

for node in ${hostname[@]}

do

ssh root@${node} "hostname"

done
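The same loop-over-nodes pattern recurs in nearly every step of this guide. As a minimal sketch, it could be wrapped in a helper. `run_on_nodes` and the `REMOTE_RUNNER` variable are hypothetical names introduced here, not part of the original scripts. Setting `REMOTE_RUNNER=echo` gives a dry run that prints what would be executed instead of opening SSH connections.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original scripts): run one command
# on every node passed as an argument.
# REMOTE_RUNNER defaults to ssh; set it to "echo" for a dry run.

run_on_nodes() {
  local cmd="$1"
  shift
  local node
  for node in "$@"; do
    echo ">>> ${node}"
    # Report a failing node but keep going with the remaining ones.
    "${REMOTE_RUNNER:-ssh}" "root@${node}" "${cmd}" || echo "FAILED: ${node}" >&2
  done
}

# Example (dry run):
#   REMOTE_RUNNER=echo run_on_nodes "hostname" k8s-node04 k8s-node05
```

Continuing past a failed node is a design choice of this sketch; a stricter variant could stop at the first failure.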

Add the environment variable (k8s-node):

echo 'PATH=/opt/k8s/bin:$PATH' >> /root/.bashrc

source /root/.bashrc

Install dependencies (k8s-node):

yum install -y epel-release

yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget

Disable the swap partition (k8s-node):

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

Load kernel modules (k8s-node):

modprobe ip_vs_rr

modprobe br_netfilter

Tune kernel parameters (k8s-node):

cat > /etc/sysctl.d/kubernetes.conf << EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

sysctl -p /etc/sysctl.d/kubernetes.conf

Synchronize the time (k8s-node):

ntpdate 0.asia.pool.ntp.org

timedatectl set-timezone Asia/Shanghai

timedatectl set-local-rtc 0

systemctl restart rsyslog

systemctl restart crond

# Run on k8s-deploy

hostname=(k8s-node04 k8s-node05)

for node in ${hostname[@]}

do

echo ${node}

ssh root@${node} "date"

done

Create the required directories (k8s-node):

mkdir -p /opt/k8s/{bin,work}

mkdir -p /etc/{kubernetes,etcd}/cert

Upgrade the kernel (k8s-node):

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

cat /boot/grub2/grub.cfg | grep initrd16

yum --enablerepo=elrepo-kernel install kernel-ml

grub2-set-default 0

grub2-mkconfig -o /boot/grub2/grub.cfg

reboot

# Run on k8s-deploy

hostname=(k8s-node04 k8s-node05)

for node in ${hostname[@]}

do

echo ${node}

ssh root@${node} "uname -r"

done

Copy the variables file (k8s-deploy):

cd /opt/k8s/bin/

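cp environment.sh environment-add.sh

Edit environment-add.sh and change the following entries:

# Array of the new worker node IPs

export NODE_NODE_IPS=(10.1.104.208 10.1.104.209)

# Array of hostnames matching the worker node IPs

export NODE_NODE_NAMES=(k8s-node04 k8s-node05)

Distribute the variables file (k8s-deploy):

# environment-add.sh was created in /opt/k8s/bin/ in the previous step

cd /opt/k8s/bin/

source environment-add.sh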

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp environment-add.sh root@${node_ip}:/opt/k8s/bin/

ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"

done

Distribute the CA certificate and key

Distribute the CA certificate files (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert"

scp ca*.pem ca-config.json root@${node_ip}:/etc/kubernetes/cert

done

Deploy the kubectl command-line tool

Distribute the kubectl binary to the nodes (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kubernetes/client/bin/kubectl root@${node_ip}:/opt/k8s/bin/

ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"

done

Distribute the kubeconfig to the nodes (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p ~/.kube"

scp kubectl.kubeconfig root@${node_ip}:~/.kube/config

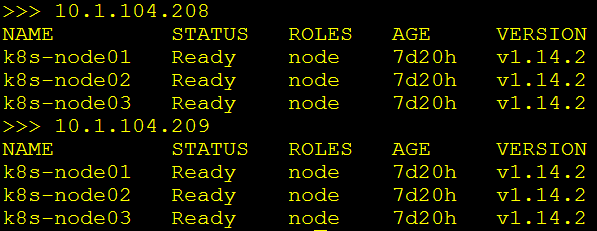

done检验kubeconfig配置(k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "kubectl get node"

done

Deploy the flannel network

Obtain flannel and distribute it to the nodes (k8s-deploy):

# Change to the k8s working directory

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp flannel/{flanneld,mk-docker-opts.sh} root@${node_ip}:/opt/k8s/bin/

ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"

done

Distribute the flannel certificate and private key to the nodes (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/flanneld/cert"

scp flanneld*.pem root@${node_ip}:/etc/flanneld/cert

done

Distribute the flannel service file to the nodes (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp flanneld.service root@${node_ip}:/etc/systemd/system/

done

Start the flannel service on each node and check that it started (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable flanneld && systemctl restart flanneld"

done

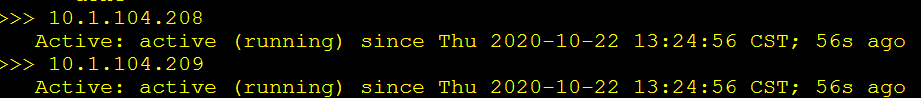

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status flanneld | grep Active"

done

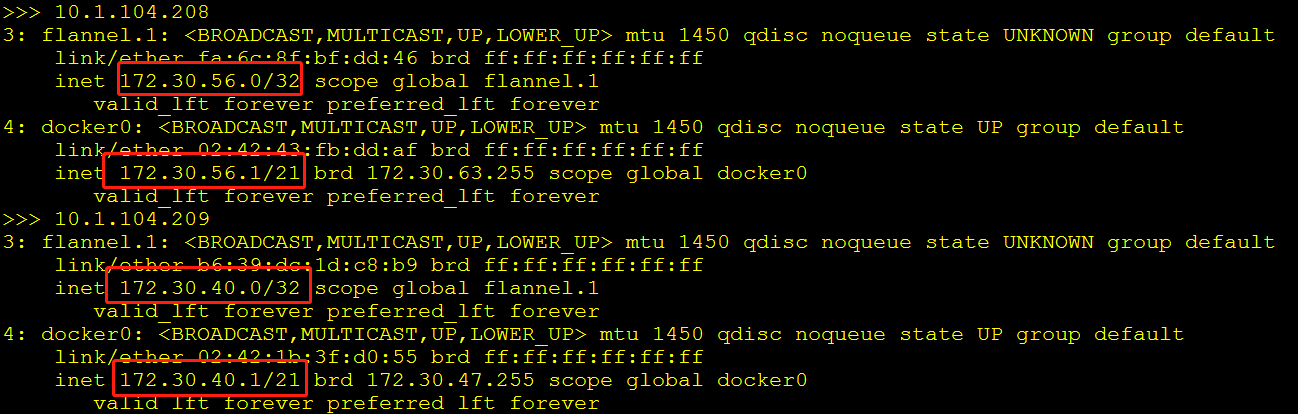
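The `grep Active` check above relies on reading the output by eye. Below is a sketch of extracting just the state word from an `Active:` line, so a script can compare it. The function name `unit_state` is an assumption for illustration. On the nodes themselves, `systemctl is-active flanneld` reports the same state directly.

```shell
#!/usr/bin/env bash
# Illustrative sketch: read `systemctl status` text on stdin and print
# the state word that follows "Active:" (for example "active" or "failed").
unit_state() {
  awk '$1 == "Active:" { print $2; exit }'
}

# Intended use from k8s-deploy (not executed here):
#   ssh root@${node_ip} "systemctl status flanneld" | unit_state
```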

Verify the flannel state on each node (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/opt/k8s/work/ca.pem \

--cert-file=/opt/k8s/work/flanneld.pem \

--key-file=/opt/k8s/work/flanneld-key.pem \

ls ${FLANNEL_ETCD_PREFIX}/subnets

- Lists the Pod subnets that were created; flanneld on each node creates one subnet.
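Each entry returned by the `ls` above is an etcd key whose last path component encodes a subnet, with `-` in place of `/`. A small sketch for turning such a key into CIDR notation follows. The function name and the sample key in the comment are assumptions for illustration, and the actual key prefix depends on `FLANNEL_ETCD_PREFIX` in your environment.

```shell
#!/usr/bin/env bash
# Illustrative sketch: convert a flannel subnet key such as
#   /kubernetes/network/subnets/172.30.192.0-21   (sample value)
# into CIDR notation (172.30.192.0/21).
subnet_key_to_cidr() {
  local key="${1##*/}"                        # keep the last path component
  printf '%s\n' "${key%-*}/${key##*-}"        # swap the final "-" for "/"
}
```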

Verify that the nodes can reach each other over the Pod network (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "/usr/sbin/ip addr show flannel.1|grep -w inet"

done

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "ping -c 1 172.30.192.0"

ssh ${node_ip} "ping -c 1 172.30.160.0"

ssh ${node_ip} "ping -c 1 172.30.168.0"

ssh ${node_ip} "ping -c 1 172.30.112.0"

ssh ${node_ip} "ping -c 1 172.30.200.0"

ssh ${node_ip} "ping -c 1 172.30.176.0"

ssh ${node_ip} "ping -c 1 172.30.56.0"

ssh ${node_ip} "ping -c 1 172.30.40.0"

done

Distribute the node binaries

Distribute the kubernetes binaries to the nodes (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kubernetes/server/bin/{apiextensions-apiserver,cloud-controller-manager,kube-apiserver,kube-controller-manager,kube-proxy,kube-scheduler,kubeadm,kubectl,kubelet,mounter} root@${node_ip}:/opt/k8s/bin/

ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"

done

Deploy worker nodes (docker)

Install the dependency packages the worker nodes need (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "yum install -y epel-release"

ssh root@${node_ip} "yum install -y conntrack ipvsadm ntp ntpdate ipset jq iptables curl sysstat libseccomp && modprobe ip_vs"

done

Distribute the docker binaries, configuration file, and service file to the nodes (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp docker/* root@${node_ip}:/opt/k8s/bin/

ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"

done

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp docker.service root@${node_ip}:/etc/systemd/system/

done

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/docker/ ${DOCKER_DIR}/{data,exec}"

scp docker-daemon.json root@${node_ip}:/etc/docker/daemon.json

done

Start docker and check its status (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable docker && systemctl restart docker"

done

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status docker|grep Active"

done

Check that docker0 and flannel.1 are in the same network segment:

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "/usr/sbin/ip addr show flannel.1 && /usr/sbin/ip addr show docker0"

done
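Reading the two `ip addr` outputs side by side works for a couple of nodes. As a sketch of doing the comparison mechanically, the functions below test whether two IPv4 addresses share a network for a given prefix length. The function names are hypothetical, and the /21 prefix used in the note after the block is an assumption; use the subnet length flannel actually allocates in your cluster.

```shell
#!/usr/bin/env bash
# Illustrative sketch: decide whether two IPv4 addresses are in the
# same network for a given prefix length.

# Convert a dotted-quad address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  # Split the address on "." into the positional parameters.
  set -- $1
  echo "$(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))"
}

# same_network ADDR_A ADDR_B PREFIX_LEN
# Succeeds (exit 0) when both addresses share the first PREFIX_LEN bits.
same_network() {
  local a b prefix mask
  a="$(ip_to_int "$1")"
  b="$(ip_to_int "$2")"
  prefix="$3"
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( a & mask )) -eq $(( b & mask )) ]
}
```

With a /21 allocation, `same_network 172.30.192.0 172.30.192.1 21` succeeds, while comparing an address from 172.30.192.0/21 with one from 172.30.200.0/21 fails.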

Deploy worker nodes (kubelet)

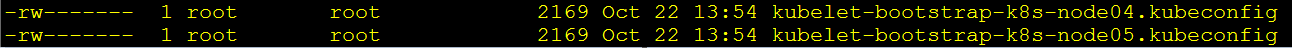

Create the kubelet bootstrap kubeconfig file for each node (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_name in ${NODE_NODE_NAMES[@]}

do

echo ">>> ${node_name}"

export BOOTSTRAP_TOKEN=$(/opt/k8s/work/kubernetes/server/bin/kubeadm token create \

--description kubelet-bootstrap-token \

--groups system:bootstrappers:${node_name} \

--kubeconfig kubectl.kubeconfig)

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/k8s/work/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

kubectl config use-context default --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

done
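If `kubeadm token create` fails, `BOOTSTRAP_TOKEN` ends up empty and the loop above still writes a kubeconfig with no usable credential. A hedged sketch of a guard that could run before `kubectl config set-credentials` follows: kubeadm bootstrap tokens consist of six lowercase alphanumerics, a dot, and sixteen more. The function name `is_bootstrap_token` is an assumption, not part of the original scripts.

```shell
#!/usr/bin/env bash
# Illustrative sketch: succeed only if the argument looks like a
# kubeadm bootstrap token ("abcdef.0123456789abcdef" style).
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Possible use inside the loop (not executed here):
#   is_bootstrap_token "${BOOTSTRAP_TOKEN}" || { echo "no token for ${node_name}" >&2; exit 1; }
```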

List the tokens kubeadm created for the nodes, then distribute each bootstrap kubeconfig file to its node:

/opt/k8s/work/kubernetes/server/bin/kubeadm token list --kubeconfig kubectl.kubeconfig

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_name in ${NODE_NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kubelet-bootstrap-${node_name}.kubeconfig root@${node_name}:/etc/kubernetes/kubelet-bootstrap.kubeconfig

done

Distribute the kubelet parameter configuration file to the nodes (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

sed -e "s/##NODE_IP##/${node_ip}/" kubelet-config.yaml.template > kubelet-config-${node_ip}.yaml.template

scp kubelet-config-${node_ip}.yaml.template root@${node_ip}:/etc/kubernetes/kubelet-config.yaml

done

Distribute the kubelet service file to the nodes (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_name in ${NODE_NODE_NAMES[@]}

do

echo ">>> ${node_name}"

sed -e "s/##NODE_NAME##/${node_name}/" kubelet.service.template > kubelet-${node_name}.service

scp kubelet-${node_name}.service root@${node_name}:/etc/systemd/system/kubelet.service

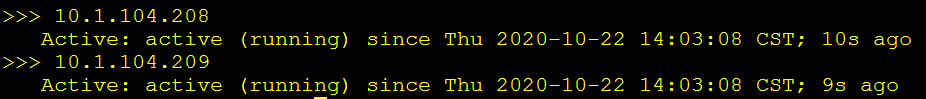

done启动kubelet并验证(k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kubelet/kubelet-plugins/volume/exec/"

ssh root@${node_ip} "/usr/sbin/swapoff -a"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kubelet && systemctl restart kubelet"

done

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status kubelet | grep Active"

done

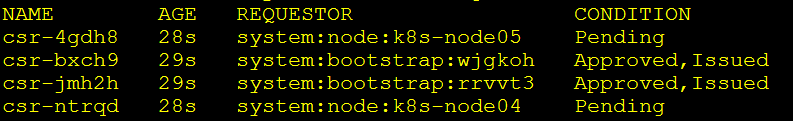

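Approve the kubelet requests (k8s-master):

kubectl get csr

# If several nodes are pending, approve each request in turn.

kubectl certificate approve csr-4gdh8

- An auto-approval policy was configured for the bootstrap certificates, so only the client request needs to be approved manually.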

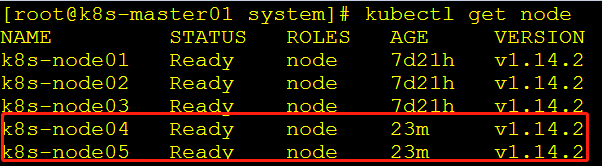
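With several new nodes, copying each CSR name by hand gets tedious. The sketch below picks the pending request names out of `kubectl get csr` output, assuming the CONDITION column is the last one. The function name is hypothetical. Review the list before approving anything, since this selects every pending request.

```shell
#!/usr/bin/env bash
# Illustrative sketch: read `kubectl get csr` output on stdin and print
# the names of requests that are still pending.
pending_csrs() {
  # Skip the header line; keep rows whose last column is exactly "Pending".
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Intended use on a master node (not executed here):
#   kubectl get csr | pending_csrs
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```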

Verify that kubelet is available (k8s-master):

# Label the new node with the node role (repeat for k8s-node05)

kubectl label nodes k8s-node04 node-role.kubernetes.io/node=

kubectl get node

curl -s --cacert /opt/k8s/work/ca.pem \

--cert /opt/k8s/work/admin.pem \

--key /opt/k8s/work/admin-key.pem \

https://10.1.104.208:10250/metrics

Deploy kube-proxy

Distribute the kube-proxy kubeconfig file to the nodes (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_name in ${NODE_NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kube-proxy.kubeconfig root@${node_name}:/etc/kubernetes/

done

Distribute the kube-proxy configuration file to the nodes (k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for (( i=0; i < 2; i++ ))

do

echo ">>> ${NODE_NODE_NAMES[i]}"

sed -e "s/##NODE_NAME##/${NODE_NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_NODE_IPS[i]}/" kube-proxy-config.yaml.template > kube-proxy-config-${NODE_NODE_NAMES[i]}.yaml.template

scp kube-proxy-config-${NODE_NODE_NAMES[i]}.yaml.template root@${NODE_NODE_NAMES[i]}:/etc/kubernetes/kube-proxy-config.yaml

done

- This step uses an index loop: set the bound on i to the number of nodes being added, for example i < 2 for two new nodes.
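As an alternative to editing the bound by hand, the loop could take it from the array length. This is a sketch, not the original author's script: the array values are copied from environment-add.sh in this guide, and `node_pairs` is a hypothetical helper that only prints the name and IP pairs the loop would process.

```shell
#!/usr/bin/env bash
# Values copied from environment-add.sh in this guide.
NODE_NODE_IPS=(10.1.104.208 10.1.104.209)
NODE_NODE_NAMES=(k8s-node04 k8s-node05)

# Hypothetical helper: print "name ip" pairs, one per line.
# ${#NODE_NODE_NAMES[@]} expands to the number of array elements,
# so the bound follows the array when nodes are added or removed.
node_pairs() {
  local i
  for (( i = 0; i < ${#NODE_NODE_NAMES[@]}; i++ )); do
    echo "${NODE_NODE_NAMES[i]} ${NODE_NODE_IPS[i]}"
  done
}

node_pairs
```

This relies on both arrays having the same length and order, which is how environment-add.sh defines them.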

Distribute the kube-proxy service file to the nodes (k8s-deploy):

# Change to the k8s working directory

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_name in ${NODE_NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kube-proxy.service root@${node_name}:/etc/systemd/system/

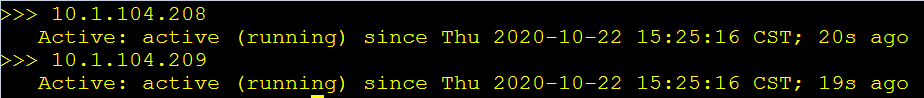

done启动kube-proxy并验证(k8s-deploy):

cd /opt/k8s/work

source /opt/k8s/bin/environment-add.sh

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kube-proxy"

ssh root@${node_ip} "modprobe ip_vs_rr"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-proxy && systemctl restart kube-proxy"

done

for node_ip in ${NODE_NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status kube-proxy | grep Active"

done

检验新加节点正常工作

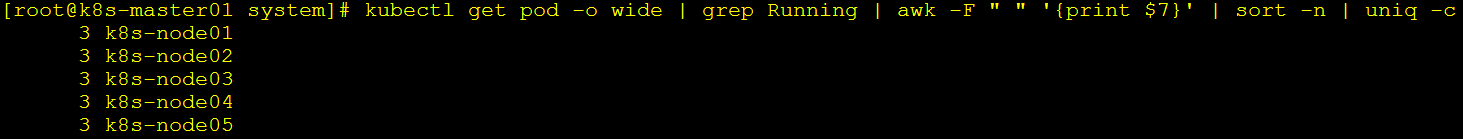

扩容nginx服务并检查节点分布(k8s-master):

kubectl scale deployment daemon-nginx-deployment --replicas=10

kubectl get pod -o wide | grep Running | awk -F " " '{print $7}' | sort -n | uniq -c
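The pipeline above counts Running pods per node by taking the seventh column of `kubectl get pod -o wide`. Below is a sketch of the same count done in a single `awk` pass, which matches the STATUS column exactly instead of grepping the whole line. The function name is hypothetical, and it assumes the same column layout as the original command (STATUS in column 3, NODE in column 7).

```shell
#!/usr/bin/env bash
# Illustrative sketch: read `kubectl get pod -o wide` output on stdin
# and print "<count> <node>" for pods whose STATUS is Running.
pods_per_node() {
  awk '$3 == "Running" { count[$7]++ }
       END { for (node in count) print count[node], node }' | sort -k2
}

# Intended use on a master node (not executed here):
#   kubectl get pod -o wide | pods_per_node
```

If k8s-node04 and k8s-node05 appear in the result with non-zero counts, the scheduler is placing pods on the new nodes.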